Archive for November, 2010

Crepuscule

This video shows the effect I want, using the mouse: as the mouse scrolls from left to right, the lion walks backwards away from you slowly, until he stops altogether. Then he begins to walk towards you until he gets too blurry to see.

This is a video of images of my mouse and rabbit. The mouse scrolling across the screen achieves the desired effect.

This video shows the circuit for the ultrasonic range finder. At first I was using face detection to determine the distance of the viewer, but I decided to use an ultrasonic range finder instead. I think it is a little more accurate at far distances, and sometimes face detection wouldn’t detect me if I had glasses on.

Below, I show the code that I use.

(more…)

Big Pixel – process

See here for older Big Pixel progress links.

Jack and I have continued work with our light fixture, aka Byte Light.

We have soldered 150 10 mm superbright LEDs (50 red, 50 green, and 50 blue) onto three plexiglass rods, one color per rod; each rod will represent one of the R, G, and B lines in our LCD pixel representation. Each LED needs 20 mA and between 2 and 4 V, depending on the color. With all 150 LEDs lit (every switch on), that adds up to about 3 A, so we need an external power supply rated for that current. We will wrap each colored LED rod in frosted paper and encase the three in a square plexiglass box that will shine light down on the user, who will be manipulating the 24 switches on the wall.
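The 3 A figure comes from simple arithmetic, sketched below. It assumes every LED draws its full 20 mA independently (for example, each LED wired in parallel with its own resistor), which is a guess about the wiring rather than a description of our actual circuit. The function and constant names are only illustrative.

```python
# Rough power budget for the light fixture.
# Assumption: each LED draws its full 20 mA on its own branch.

LED_CURRENT_MA = 20   # current per LED, from the post
LEDS_PER_ROD = 50     # LEDs of one color on one rod
RODS = 3              # red, green, and blue rods


def rod_current_ma(leds_per_rod=LEDS_PER_ROD, led_current_ma=LED_CURRENT_MA):
    """Current drawn by one fully lit rod, in milliamps."""
    return leds_per_rod * led_current_ma


def total_current_ma(rods=RODS):
    """Current drawn when every rod is fully lit, in milliamps."""
    return rods * rod_current_ma()


if __name__ == "__main__":
    print("per rod:", rod_current_ma(), "mA")
    print("all rods:", total_current_ma() / 1000, "A")
```

Under that assumption each rod needs about 1 A, so whatever switches a rod has to handle that much current on its own.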

Our circuit uses two multiplexers to read the 24 light switches as input. The Arduino then takes the binary input and translates it into byte values (0-255) for R, G, and B, and three transistors let us alter the brightness of each of the R, G, and B light rods.
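To illustrate the translation step, here is a minimal sketch of turning 24 switch states into three byte values. It is written in Python rather than Arduino code, and it assumes the first 8 switches are red, the next 8 green, and the last 8 blue, with the leftmost switch of each group as the most significant bit. That ordering and the function names are assumptions for the example, not our actual wiring.

```python
# Sketch: 24 on/off switches -> (R, G, B) values in 0-255.
# Assumed layout: switches 0-7 red, 8-15 green, 16-23 blue, MSB first.


def bits_to_byte(bits):
    """Combine 8 on/off values (most significant first) into 0-255."""
    if len(bits) != 8:
        raise ValueError("expected exactly 8 bits")
    value = 0
    for bit in bits:
        # Shift what we have so far left by one and append the new bit.
        value = (value << 1) | (1 if bit else 0)
    return value


def switches_to_rgb(switches):
    """Split 24 switch states into three bytes: (red, green, blue)."""
    if len(switches) != 24:
        raise ValueError("expected exactly 24 switches")
    return tuple(bits_to_byte(switches[start:start + 8]) for start in (0, 8, 16))


if __name__ == "__main__":
    # Only the leftmost red switch is on: red gets its largest single bit.
    print(switches_to_rgb([1] + [0] * 23))
```

With that ordering, flipping only the leftmost switch in a group gives 128, and flipping only the rightmost gives 1.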

Storyboard – D.A.B.A. girls

David, Nathalie, Aly, and Ariella shot a satirical comedy about a girl who is part of the DABA girl community – Dating a Banker, Anonymous. After the crash, she has to relearn how to live with her boyfriend, who can no longer buy her Prada shoes.

Click here to watch it.

(we all pitched in to draw this storyboard)

wall wash pixel from an LCD pixel representation

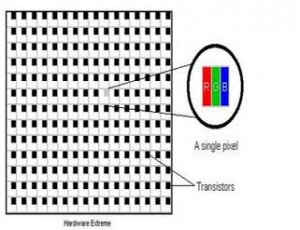

Screen images bombard contemporary society, yet few people understand how these images are composed.

Jack Kalish and I are collaborating to create a light fixture that represents one giant LCD pixel of RGB color. To do so, we are going to use three rows of bright LEDs (one red, one green, and one blue). Each LED row will be controlled separately and have 256 distinct brightness levels (0-255). The LEDs will be mounted perpendicular to the wall, above head level, and create a wall wash effect. As the colors of the LED rows mix (each row at its own brightness level), the light emitted represents the color given off by a pixel that is made up of those three color levels.

The level of brightness of each LED row depends on the interaction: We are going to set up a grid of switches that a person can interact with to control the brightness of each of the three LED rows. Each switch that a person can control will represent a bit. As a user plays with the switches, he will be manipulating the digital data manually.

For a typical LCD pixel, each of the R, G, and B values is one byte made up of 8 bits, so we will have 24 manipulatable switches. Each switch represents either an off or an on value (0 or 1), and each group of 8 switches determines a value from 0-255 for one of R, G, and B.

By scaling up both the data (each of the 24 light switches represents one bit) and the size of the representative pixel, we hope the experience will be immersive and that, through playing and interacting with our switchboard, the user will begin to understand how digital data communicates simple signals.

(We were trying to decide what types of lights to use – at first we thought three fluorescent lights would be good, until we realized that dimming them would pose a problem)

Cochlea

(see project site here)

Cochlea is an interactive sculptural artwork based on the inner ear, which contains small hairs that receive aural input, analyze it by its component frequencies, and send corresponding electrical signals to the brain.

Cochlea is a sculpture made out of plexiglass, 32 LEDs, piezo sensors, ribbon, a microphone, and paint. The cochlea both analyzes the frequency of incoming sound and outputs sound based on which region is stimulated, either by blowing or touching. Users can “play” the cochlea as if it were a musical instrument by touching different parts of the coil-shaped structure, which triggers synthesized sounds that correspond to the frequencies of aural input received at that region of the cochlea. There is also a microphone on the end of the structure. It picks up incoming sound, whose frequency is analyzed, and the sculpture lights up in the region of the human cochlea that would interpret those frequencies, so the viewer can visualize the activity in that region of the organ. The cochlea creates its own loop of incoming sound analysis and sound output.
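As a rough illustration of the frequency-to-region idea, the sketch below maps a frequency to one of 32 LED positions along the coil. It assumes a simple logarithmic spread over 20 Hz to 20 kHz, with the highest frequencies at index 0 (the base of the real cochlea responds to high frequencies and the apex to low ones). The range, the log spacing, and the function name are all assumptions for the example, not the mapping the sculpture actually uses.

```python
import math

# Sketch: pick which of N LEDs along the coil lights up for a frequency.
# Assumed: log spacing from 20 Hz to 20 kHz, index 0 = base (highest pitch).

NUM_LEDS = 32
F_MIN_HZ = 20.0
F_MAX_HZ = 20000.0


def led_index_for_frequency(freq_hz, num_leds=NUM_LEDS,
                            f_min=F_MIN_HZ, f_max=F_MAX_HZ):
    """Return the LED index (0 = base, num_leds - 1 = apex) for a frequency."""
    # Clamp to the assumed audible range so the log is always defined.
    freq_hz = max(f_min, min(f_max, freq_hz))
    # 0.0 at f_min, 1.0 at f_max, evenly spaced per octave in between.
    position = math.log(freq_hz / f_min) / math.log(f_max / f_min)
    from_apex = min(num_leds - 1, int(position * num_leds))
    # Flip so that high frequencies land at the base (index 0).
    return (num_leds - 1) - from_apex


if __name__ == "__main__":
    for f in (50, 440, 4000, 15000):
        print(f, "Hz -> LED", led_index_for_frequency(f))
```

A log spacing gives each octave roughly the same number of LEDs, which is why it is a common first approximation here.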

Read on to see photos of our process.

ICM midterm

Not what I was going for at all, but below are images from my video capture. Here is the code and the rest of the applet.

Here are some shots:

It’s a stable background; when you move about, you wipe away the background with a video of yourself that fades away over time.

What I thought I wanted to work on is a fiery background with you as the water blob in the foreground, or something like that, or at least a manipulatable background.

For the final…

(more…)

MotorLab

Just a short video of turning a motor on and off with different parameters, using a transistor.

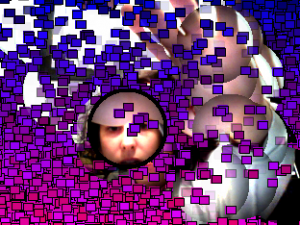

pixelWork

This is a snapshot of my Processing homework. It is similar to my last assignment, but it lets you look at a video through a small lens (which follows the mouse), and everywhere outside of that circle is a snapshot of the past that eventually gets obscured by a sea of squares. Here’s the link to the sketch itself and the code.